Understand who and what.

Challenge assumptions.

Ensure usability.

An undergraduate class that deeply affected me was Human Factors for Architecture. Even after I switched to graphic design, I was struck by the idea that a designer could learn what users wanted and needed. Although user needs were rarely a factor in meetings with my graphics customers, I always kept users in mind, especially in my technical illustration work. My interest in users crystallized during a Master's degree class, Ethnographic Field Methods. I finally had the tools I needed. Who knew not guessing could be so empowering? And frankly, hearing from users and stakeholders was more interesting and fun than just making assumptions.

Discovery

Below is a poster I created while at Yahoo! to explain the process preceding the redesign of the Ad Systems campaign planning tools. There were many stakeholders, and the team ran on bits of information gleaned from casual conversations with the sales teams. The members of the product team also did not talk to each other. I needed to educate the team that before designing a solution, they needed to understand the problem and the context surrounding that problem. The poster was successful, and permission was granted for an extensive research initiative.

Diagram of a proposed project's discovery phase.

Heuristic Evaluation & Documentation

Evaluating a website or application using a standard set of criteria constitutes a heuristic evaluation. While the evaluation is generally focused on the user interface, it can include the internal processes that support the user experience and the product's documentation.

One marchFIRST customer was AlphaDog, a procurement management company. As part of our response to the RFP, I performed a heuristic evaluation and then compiled the findings into chapter 2 of a document used for strategy and scoping. I was fortunate enough to present the findings of the evaluation in a meeting with the customer.

Evaluation used during scoping meetings with the client.

Contextual Enquiry

This form of research combines the techniques of ethnographic research and user interviews. While more focused and limited than a broad ethnographic study, contextual enquiry takes the designer and other team members into a day-in-the-life of the user. Besides quietly observing the whole space the worker is in, and watching and listening to them do their job, asking them to think aloud while working allows us to hear what they are thinking and hear explanations of their actions. Without this information, designers are just guessing at worst, or relying on the feedback of executives or managers at best. While stakeholder input is vital, talking to end users will help ensure they get a product that supports their tasks efficiently and with few errors.

The second Clarify project I managed as the UX lead, ClearSupport, included extensive research before the design process began. The staff researcher and I visited three customer sites where we made observations of the users in their environments, watched participants work, and interviewed participants afterwards. The data collected was instrumental in shaping design decisions and provided backup for design decisions during debates with stakeholders, product managers, and engineering.

The most interesting finding was that users wanted, and needed, to keep multiple tickets open so they could work on them as they researched and evolved solutions. The users were frequently interrupted, were waiting for information from others, or needed to complete detailed research on one or more of the tickets. The original client-side application only allowed one ticket to be open at a time, which was inefficient and led to stale or even orphaned tickets. Our recommendation was to allow up to 8 or so tickets to be open at one time, and to use the WIP Bin (work-in-progress list) as one method to allow the user to track and access the open tickets. The researcher and I successfully presented the idea to the Product team. Users were particularly excited about this new flow and design. It was a success for contextual enquiry and thinking outside the box.

Typical workstation of a customer service representative.

Audience Segmentation & Task Analysis

Who are the users?

There is almost always more than one user type or role we must design for. Segmenting users into logical groups allows teams to better understand who they are designing for and the dynamics of task flows and actor touchpoints. Sometimes, a simple list and description are enough information for the team to do their job, while larger projects require more complex and formal documents. These products seem to be the least popular deliverables, especially for Agile teams.

- User Personas

- Mental Models

- Experience Lifecycles

What are the tasks? How do they relate?

A task flow is generally completed by one of several roles on the team. I have worked with teams where the Product Manager or the Dev Lead created them, and with others where the UX Designers did. Sometimes, when many task flows are interrelated, something bigger is called for.

As the Yahoo! Ad Systems designer and researcher, I noticed disconnects between task flows provided by different Product Managers. To resolve these issues, I organized working sessions with the PMs where we reviewed the task flows and identified the disconnects. But I also had to observe and interview users to understand how the work was actually completed, and their pain points. The result of this extended research was a super-task-flow, which was a talking point for working sessions and cross-functional focus groups attended by more than 100 executives, product managers, and line workers.

This deliverable probably had the most profound effect on any team in my career. It focused the team's attention on unknown problems and galvanized support to move on to the design phase. Eventually, an implementation phase resulted in user acceptance testing in Sunnyvale, Boston, New York City, London, Paris, and Taipei.

Excerpt from the full flow, which was over 10 feet long when printed.

Guerrilla Testing

To ensure solution viability, usability testing of paper or digital prototypes should be conducted before development begins, when there is still time to change the design. Even if testing cannot be conducted with outside participants, using in-house participants can be invaluable.

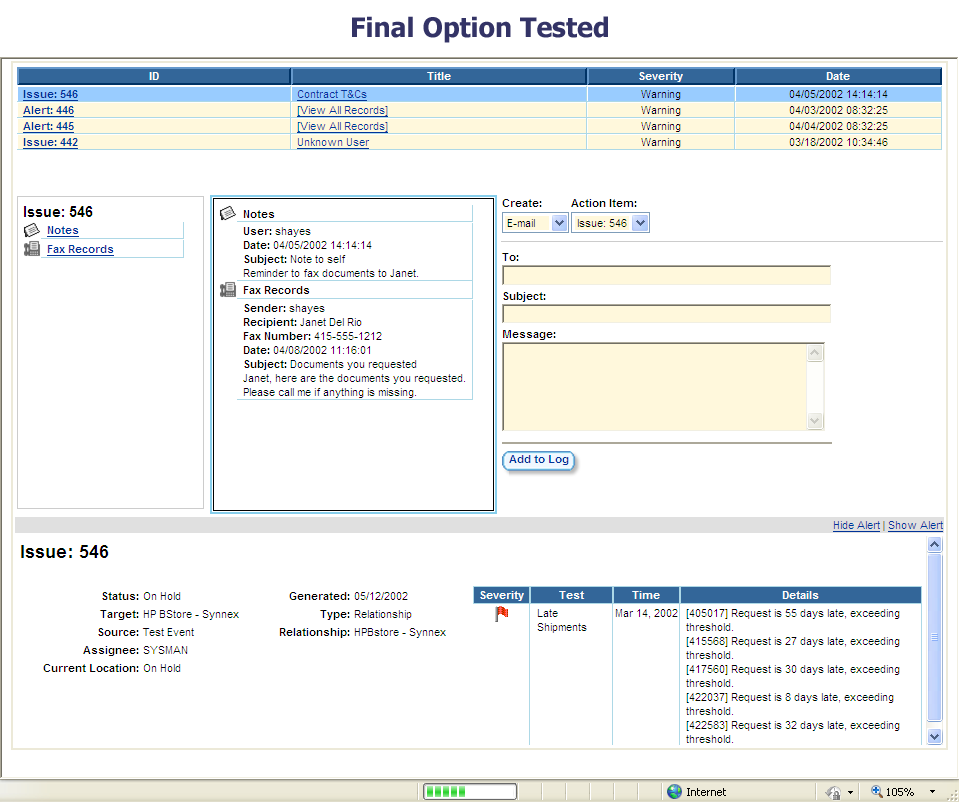

At Bizgenics, I had the opportunity to be both the Product Manager and the UX Designer on one project. There was a need to bring together the multiple threads of communication that customer service issues generated: email, text, and phone call notes. After conversations with stakeholders, I generated a requirements document, then generated a couple of proposed designs. When the designs were presented in meetings, there was no clear favorite.

I completed guerrilla testing of the four prototypes with 8 participants recruited from inside the company, as well as from customers who happened to visit the office. Three initial variations were tested, each with a slightly different organization of collaboration components. Results from testing showed statistical preferences for particular designs, as well as consistency of errors by participants. There was also feedback that importing issue details into the collaboration tool would greatly improve usability. The fourth design was based on feedback and tested well during the second round.

Teaching Designers to Conduct User Testing

The first two steps were to present best practices for user research sessions and to get licenses for Morae, by TechSmith. Then the 2008 SuccessFactors User Conference in San Francisco provided the impetus for a user testing plan, and a system to recruit customer participants. The goal was to test conference participants with one to three tests in thirty minutes or less.

Working with product and UX managers, I prepared a research plan and an email template to be sent to the conference participants. Separately, I created a process for designers to submit functionality and designs to be tested. After the UX managers prioritised the test topics, I developed a framework for writing test scripts that was used by the designers whose functionality would be tested. At the same time, I pulled together the technology for administering the test and recording data. The test system included two laptop computers, TechSmith Morae, plus a video recorder and voice recorder as backups. Finally, the technology and tests were themselves tested by the user experience team, which was an exhilarating and empowering experience for all the designers.

Participants were recruited through the SuccessFactors user site and emails before the conference. As well, during the conference, subjects were recruited from the vendor exhibit floor. The two-day event was successful, with 12 customer participants and over 30 tests given. After the event, transcripts were made from the Morae recordings, and clips of each test's salient moments were turned into a video. Analysis of the test results was gathered into presentations given to cross-functional team members.

Remote Testing

Following the SuccessFactors user conference, I expanded the team's testing repertoire by creating a remote testing protocol using simple web conferencing tools. Low cost or even free, these tools provide recordings of the user's screen activity and voice. The resulting files can be viewed by team members for analysis. Another benefit of using web conferencing tools is the opportunity for other team members to observe.

Set up and practice with the test rig (L). Getting ready to open the test lab door at the conference (R).

Site Map

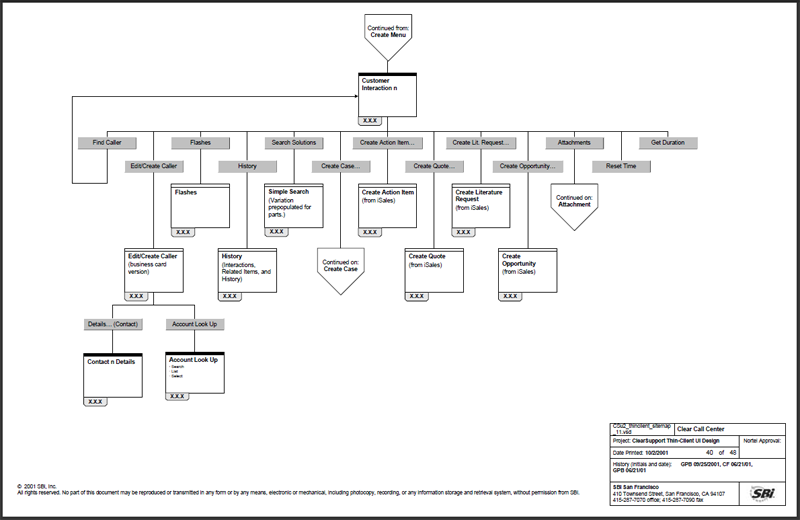

Company: marchFIRST; Customer: Clarify; Project: Site Map for Clear Support Web Application conversion.